Abstract

In the fast-paced and complex world of cybersecurity, traditional threat detection methods based on static Indicators of Compromise (IoCs) are increasingly ineffective. IoCs suffer from short lifespans, high false positive rates, and lack of context, limiting their value in detecting sophisticated and evolving attacks. This talk delves into the transformative role of Cyber Threat Intelligence (CTI), behavioral analytics, and AI-powered enrichment pipelines in reshaping threat detection from a reactive, indicator-driven exercise into a proactive, context-rich process. By leveraging diverse AI models, including Variational Autoencoders for anomaly detection, Temporal Convolutional Networks combined with Bidirectional GRUs for behavioral sequence modeling, and Graph Neural Networks for relational learning, modern systems can uncover subtle and multi-stage attacks that evade traditional defenses.

Contextual enrichment techniques, such as natural language processing, risk scoring, and auto-tagging, further amplify detection capabilities by structuring and prioritizing raw telemetry into actionable intelligence. This integration reduces alert fatigue, accelerates triage, and enables security operations centers (SOCs) to respond faster and more effectively, even across multilingual threat landscapes. The talk also addresses ongoing challenges, including data bias, adversarial evasion, model interpretability, and pipeline complexity, highlighting active research aimed at overcoming these hurdles. Ultimately, the future of threat detection lies in learning the attacker's intent, building seamless feedback loops between intelligence and detection teams, and moving from overwhelming noise to clear, contextual signals, ushering in a new era where defenders dance with signals rather than chase shadows.

I. Introduction

In today’s rapidly evolving threat landscape, relying solely on Indicators of Compromise (IoCs) is no longer sufficient: detection without context is simply noise. Traditional IoC-based methods struggle with short lifespans, evasion tactics, and overwhelming false positives, leaving defenders with fragmented insights and delayed responses. As cyber attackers become more sophisticated, moving quickly and hiding in plain sight, security teams must move beyond chasing IPs and hashes in spreadsheets. This paper explores how the integration of Cyber Threat Intelligence (CTI), behavioral analytics, and AI-driven enrichment pipelines is reshaping detection into a smarter, adaptive, and deeply contextual process.

By correlating weak signals into coherent attack narratives and building feedback loops between intelligence and detection teams, organizations can uncover attacker intent rather than just isolated indicators. As noted by Gartner, “contextual threat intelligence increases detection accuracy by over 40\%,” underscoring the critical role of enriched, behavior-based detection in modern cybersecurity defense.

II. The real limits of IoC-based detection in modern environments

A. IoCs as a starting point: What they are and why they remain a baseline in threat detection.

An Indicator of Compromise (IoC) is an artifact usually collected after an attack. Detection teams use it to spot whether the same attack is occurring on a network or a host. Threat hunters also use it to perform forensics or to find new IoCs by pivoting on existing ones.

IoCs take various forms: an IP address, a Fully Qualified Domain Name (FQDN), a file hash, etc.

Their purpose is simple: once implemented in detection tools, they prevent a known attack from occurring again.

The STIX standard (Structured Threat Information eXpression, defined in 2012-2013) normalizes the IoC as an object simply labelled Indicator. Prior to that, there was no common format for exchanging this information, which led to implementation issues.
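To make the Indicator object concrete, here is a minimal sketch that builds one as a plain Python dictionary. It assumes the JSON-based STIX 2.1 serialization rather than the original 2012-2013 format, and the helper name `make_stix_indicator`, the indicator name, and the IP address (taken from a documentation-only range) are all illustrative.

```python
import json
import uuid
from datetime import datetime, timezone


def make_stix_indicator(pattern: str, name: str) -> dict:
    """Build a minimal STIX 2.1 Indicator object as a plain dict (illustrative helper)."""
    now = datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.000Z")
    return {
        "type": "indicator",
        "spec_version": "2.1",
        "id": f"indicator--{uuid.uuid4()}",
        "created": now,
        "modified": now,
        "name": name,
        "pattern": pattern,          # what to match, in STIX patterning syntax
        "pattern_type": "stix",
        "valid_from": now,           # start of the indicator's validity period
    }


# Hypothetical example: an IP address from the 198.51.100.0/24 documentation range.
indicator = make_stix_indicator(
    pattern="[ipv4-addr:value = '198.51.100.7']",
    name="Example C2 address",
)
print(json.dumps(indicator, indent=2))
```

Because the object is plain JSON, any tool that understands the format can ingest it without a custom parser, which is the interoperability gain a shared standard brings.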

IoCs are now widely used and are shared across the community by threat hunters, malware analysts, researchers and security vendors.

IoCs are the baseline of threat detection because they are the most immediate and actionable evidence that malicious activity is ongoing. They are easy to share and implement. Since IoC-based detection amounts to matching observed values against indicators, it remains simple and requires far fewer resources than, for instance, AI-powered detection. It was considered the core of threat detection for many years and, unfortunately, still is by many.

B. Inherent technical constraints: Short lifespan, easy evasion, and high false positive rates.

However, IoCs have many drawbacks and, despite being useful, can put a lot of strain on security tools and analysts. Their main problem is that they are static: they don’t change, while attackers do. Hence, their lifespan is short. IP addresses become obsolete within hours to a few days because attackers rotate them frequently. File hashes and certificates last several weeks to months, which remains quite short. IoCs also assume a relatively stable environment, whereas cloud workloads, containers and ephemeral systems now change constantly. Cloud resources can live for minutes to hours, which is less time than an IoC needs to spread. IoC-based detection struggles to keep up with dynamic asset inventories and auto-scaling infrastructures. Similarly, remote workforces, BYOD (Bring Your Own Device) policies, and SaaS (Software as a Service) adoption mean that endpoints and traffic routes change constantly. Some systems implement a decay scoring system to address this issue, but this approach has its limitations too.

The paper "Scoring model for IoCs by combining open intelligence feeds to reduce false positives" presents a method for improving the reliability of IP-based Indicators of Compromise (IoCs) by aggregating multiple open-source threat intelligence feeds and assigning a confidence-based score to each IoC. The authors analyze the independence and overlap of various feeds, apply a time-decay function to reflect the diminishing relevance of older IoCs, and evaluate each source based on features like extensiveness, timeliness, and whitelist overlap. These source scores are then used to compute a weighted average that prioritizes high-confidence feeds when determining the final IoC score. The approach aims to reduce false positives and better inform security decisions, though limitations such as sparse overlap between feeds and the need for improved parameter tuning remain areas for future improvement.
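The idea of combining a time-decay function with a source-weighted average can be sketched as follows. This is not the paper's exact formula: the exponential decay, the seven-day half-life, and the names `Sighting`, `decay` and `ioc_score` are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Sighting:
    source_weight: float  # hypothetical trust in the feed, between 0 and 1
    age_days: float       # days since the feed last reported the IoC


def decay(age_days: float, half_life_days: float = 7.0) -> float:
    """Exponential decay: 1.0 when fresh, 0.5 after one half-life (assumed shape)."""
    return 0.5 ** (age_days / half_life_days)


def ioc_score(sightings: list[Sighting], half_life_days: float = 7.0) -> float:
    """Average of the decayed sightings, weighted by the trust in each source."""
    total_weight = sum(s.source_weight for s in sightings)
    if total_weight == 0:
        return 0.0
    weighted = sum(
        s.source_weight * decay(s.age_days, half_life_days) for s in sightings
    )
    return weighted / total_weight


# A trusted feed saw the IP today; a weaker feed saw it two weeks ago.
score = ioc_score([Sighting(0.9, 0.0), Sighting(0.3, 14.0)])
print(round(score, 3))
```

With this shape, a recent report from a trusted feed dominates the score, while an old report from a low-confidence feed contributes little.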

Because their lifespan is short, an IoC that is used for too long will eventually start generating false positives. Analysts then spend a lot of time triaging alerts and separating false positives from true ones, based on the relevance of the IoC. For example, an IP address used by an attacker during an operation can later be reassigned to a legitimate service, leading to false detections.

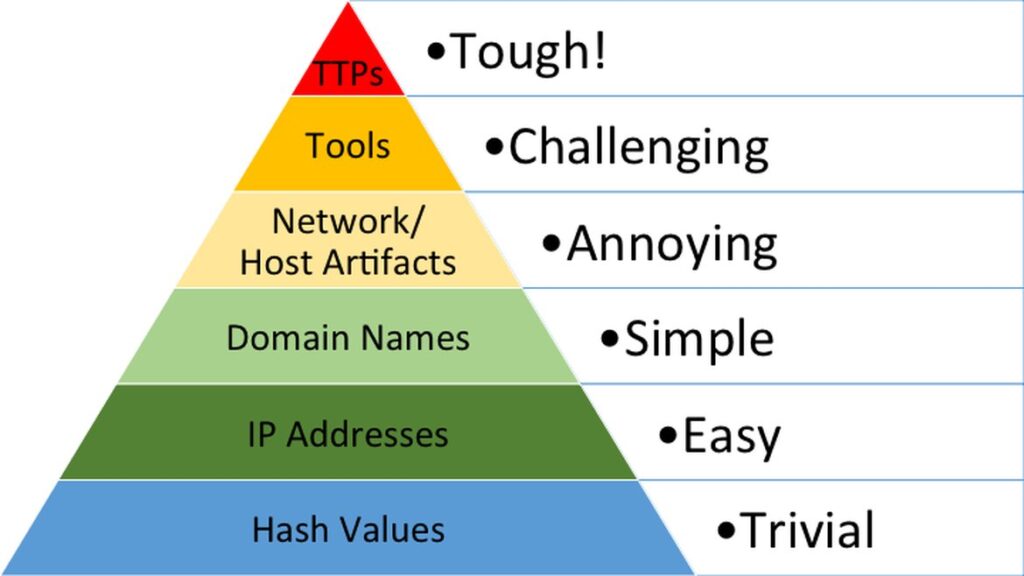

It is also very easy for attackers to evade IoC-based detection: they just need to change their IP addresses or FQDNs and the detection fails. With polymorphic malware, it is very unlikely that a sample with the same hash is sent twice. David Bianco created the Pyramid of Pain, a framework that ranks the types of indicators by how painful it is for attackers to change them.

IoCs remain important nonetheless, because they force attackers to pay attention to the infrastructure and malware they use.

C. Strategic blind spots: Lack of context, delayed relevance, and limited decision-making value.

IoCs are good for basic detection, but they lack something important: context.

Context is core in incident response or attribution. IoCs are data points, not stories. An IP address or file hash can tell you what was observed, but not why, how, or by whom. They lack intent, timing, origin, and impact, all of which are critical for effective response. Without context, you risk false positives, overreaction or wasted time. Responders need to know what the attacker did, what was affected, how they got in, etc. IoCs give hints but context is actionable. With context, it can be easy for a SOC to deprioritize an alert, or on the contrary, heavily focus on it. For example, if an IP is involved in a campaign targeting Linux users to steal data, it is not so important to deal with it if only Windows assets are monitored.

Another issue with IoCs is that they are often outdated even before they are published. IoCs are published by researchers or hunters after an attack has occurred, so detection is always one step behind. Attackers also monitor the cyberdefense community and are aware of the reports and IoCs that are published, so they know when their infrastructure is likely to be detected if they reuse it in an attack.

Depending on external feeds to build your own detection can also be a problem. External feeds might not cover what you are really vulnerable to, they are not tailored to your needs, and they can be discontinued at any time.

To tackle the delayed relevance of IoCs and the reliability of external sources, it is possible to do internal proactive threat hunting and collect IoCs before they are even used in attacks. This is usually done by pivoting on existing IoCs and identifying key characteristics of, for instance, a server: its open ports, its TLS certificate fields, etc. It is then possible to find new domains or IoCs sharing the same characteristics, which gives a more proactive approach to threat detection. However, this research is hard, costly and does not always yield results. Advanced threat actors will also change their infrastructure completely for each attack, erasing any possible link to infrastructure they have already used.

It is possible to prioritize IoCs, but it is once again a complex task, because it is all about gathering the context around them, such as mapping IoCs to threat actors or campaigns, or correlating them with your own attack surface and assets. Each IoC needs a confidence level in its maliciousness, a severity reflecting its potential impact, and a measure of its prevalence in your environment. This work is heavy, requires skilled people, and is hard to automate.

D. Scalability and integration issues: Volume overload, weak correlation, and poor fit for dynamic environments.

IoCs have another drawback: there are a lot of them. Public feeds can produce tens of thousands to millions of IoCs per day. This is a major issue, because it strains security tools such as SIEMs, NDRs or EDRs, which need to handle this massive flow of indicators and may be overloaded by it. The more indicators you collect, the less value each individual IoC has: it is one in a mass, and it becomes very hard to prioritize indicators or triage the resulting alerts. Storing them, even with archiving mechanisms, is a burden in itself. Processing IoCs does not scale easily and causes many integration issues, volume overload being one of them. Internal teams usually lack the resources to enrich, tag or curate IoCs across platforms. Despite standards like STIX, the different tools (SIEM, NDR, EDR) use different formats and ingestion methods. There is also the issue of duplication among sources, inconsistent quality and overlapping indicators, which makes automation difficult.

IoCs bring weak correlation as well. They are isolated signals and don’t tell the whole picture of what’s happening. Most tools are not tailored to correlate weak IoC signals across time and data types. An IP on its own is an alert. That same IP, tied to a phishing email and a credential dump, becomes an incident. This is where context is key, because context can bring correlation. If you know what this IoC is related to, it is easier to search for other related IoCs.

E. Workflow friction in CTI: Manual enrichment needs, limited behavioral mapping, and reactive posture.

Even when IoCs are well sourced and technically sound, they rarely flow smoothly into detection workflows. CTI teams often face structural and operational barriers that limit the value of their intelligence across the organization.

In a workflow, IoCs arrive as raw data. Enriching them takes analysts and time, as the process can be semi-automated at best. It also requires analysts to master many different tools, and it introduces a delay between receiving intelligence and deploying detection logic. This is impractical at scale, and it is hard to triage and prioritize IoCs when they don’t already have context.

In such a CTI workflow, it is also hard to map IoCs to behavioral models such as the MITRE ATT\&CK framework, so alerts stay low-fidelity and tell no narrative. CTI teams may identify threats, but detection engineers struggle to translate that into rules or signatures without behavioral context. This creates a "lost-in-translation" effect between teams: CTI knows what, detection needs to know how.

Most IoCs are collected after-the-fact, following an incident, campaign, or breach. That means the detection strategy is always playing catch-up: indicators often arrive too late to be useful against fast-moving or one-off infrastructure. CTI teams spend time analyzing yesterday’s threat, while attackers have already evolved. This creates a reactive cycle, where defense adapts only after compromise, rather than through proactive hunting or predictive modeling.

III. Leveraging behavioral analytics and CTI tools for deeper threat visibility

A. Behavioral analysis outperforms IOCs by revealing attacker intent, not just indicators.

Behavioral analysis marks a fundamental shift in threat detection: it focuses on what adversaries do, rather than what they leave behind. While IoCs detect artifacts such as IPs, domains and hashes, behavioral detection identifies actions, tactics, and sequences that reflect the adversary’s goals, methods, and strategies. Behavioral analysis tracks sequences of actions, rather than isolated signals.

It outperforms IoC-based detection in several respects. First, attackers can evade IoCs far more easily than they can change their behaviors. Remember the Pyramid of Pain? TTPs are among the hardest things for an attacker to change. Behaviors remain detectable even if the indicators are new or unknown, which also brings coverage for unknown threats. Behavioral analysis evaluates patterns over time and in context. This multi-dimensional evaluation leads to higher-fidelity alerts and fewer false positives. It is also easier to map onto frameworks like MITRE ATT\&CK. Likewise, it becomes much easier to infer the attacker’s intent and take action to protect yourself against it.

Behavioral analysis leads to a new concept, BIoCs, behavioral indicators of compromise. Instead of being artifacts, they are unusual access patterns, command-line anomalies, process trees, etc.

B. In practice, behavioral detection uncovers stealthy activity through patterns, not signatures.

Behavioral detection does not require any signature, only patterns. It is especially important for stealthy attacks that aim to stay under the radar. Techniques such as LOLBins (Living Off the Land Binaries), encoding payload or using temporary dynamic infrastructure can easily evade IoC-based detection, whereas they are still detected by patterns such as a domain admin logging in at 3AM from a foreign IP, a workstation reaching out to Tor or C2 (Command and Control) infrastructure after opening a spreadsheet or a container that suddenly spawns new privileged processes. Behavioral models can be customized, are resilient across malware variants and provide layered defense.
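As an illustration of a pattern-based rule, the sketch below encodes the "domain admin logging in at 3AM from a foreign IP" example. The event fields, the working-hours range and the expected-country baseline are hypothetical; a real deployment would learn such baselines per user rather than hard-code them.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class LoginEvent:
    user: str
    is_domain_admin: bool
    timestamp: datetime
    country: str  # country resolved from the source IP


# Hypothetical baseline, hard-coded here for brevity.
EXPECTED_COUNTRIES = {"FR"}
WORK_HOURS = range(7, 20)  # 07:00 to 19:59 local time


def is_suspicious_admin_login(event: LoginEvent) -> bool:
    """Flag privileged logins that are both off-hours and from an unexpected country."""
    off_hours = event.timestamp.hour not in WORK_HOURS
    unexpected_country = event.country not in EXPECTED_COUNTRIES
    return event.is_domain_admin and off_hours and unexpected_country


event = LoginEvent("alice", True, datetime(2024, 1, 1, 3, 14), "BR")
print(is_suspicious_admin_login(event))
```

Note that the rule contains no IP address, domain or hash: it keeps working when the attacker rotates infrastructure, which is the point of matching behavior rather than artifacts.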

MITRE maintains the Cyber Analytics Repository (CAR), a knowledge base that lists many behavioral analytics covering a wide range of techniques. For example, CAR-2019-04-004: Credential Dumping via Mimikatz provides Splunk and LogPoint queries to detect this pattern.

C. CTI tools enrich detection with context, transforming isolated alerts into coherent attack narratives.

One of the core challenges in modern detection is that raw alerts, such as a flagged IP address or a suspicious hash, often appear as fragmented, isolated signals. On their own, these alerts are difficult to interpret and prioritize. This is where Cyber Threat Intelligence tools play a critical role: they enrich these signals with context, turning them into actionable intelligence and helping defenders see the bigger picture.

CTI tools aggregate, analyze, and contextualize a wide range of data sources, including public threat reports, dark web monitoring, malware analysis, and internal telemetry. By doing so, they enable organizations to:

- Map IoCs to known threat actors, campaigns, or TTPs (tactics, techniques, and procedures). For example, if a suspicious domain appears in your environment and is known to be associated with a specific APT group, the context helps prioritize the incident and informs the likely intent or objective.

- Link disparate alerts across time and data sources. A lone suspicious PowerShell execution might not mean much. But when CTI tools correlate it with an IP flagged in a recent ransomware campaign and a credential dump found on dark web forums, it becomes part of a coherent attack chain.

- Enrich alerts with metadata. Information like threat actor profiles, first-seen timestamps, malware families, and attack timelines help analysts understand the "who," "why," and "when" behind an alert, which a raw indicator simply cannot convey.

CTI integration adds context, which makes it possible to triage alerts more effectively, prioritize response efforts and support threat hunting and hypothesis generation. This integration can be done through tools such as Threat Intelligence Platforms and enrichment plugins, or through standards like STIX and TAXII, which make context and intelligence easy to share.

CTI tools turn low-fidelity detections into high-confidence investigations by connecting the dots. They help analysts understand not just what happened, but who is behind it, how it fits into a broader campaign, and why it matters, closing the gap between detection and strategic decision-making.

D. Correlating weak, seemingly unrelated signals creates stronger, high-confidence detections.

In modern threat detection, individual signals often appear too weak or ambiguous to trigger meaningful action on their own. A single anomalous login, an obscure DNS request, or a low-severity endpoint alert might be dismissed as noise. But when these signals are correlated across time, users, systems, or data sources, they can expose sophisticated, stealthy attacks that would otherwise go unnoticed.

Example 1: Anomalous Login with Suspicious File Activity and External IP Contact

- Signal 1: A user logs in at 3:14 AM from a country where your company has no operations.

- Signal 2: Five minutes later, the same user accesses an HR folder and downloads several ZIP files.

- Signal 3: Soon after, the user’s machine contacts an IP address previously flagged for data exfiltration activity.

Individually, these might be ignored:

- The login could be a VPN artifact.

- The file access might be routine.

- The IP contact might not hit a known blacklist.

Correlated together, these events tell a clear story of possible credential compromise and data theft, leading to a confident and prioritized incident response.

Example 2: PowerShell Execution with Registry Modification with Beaconing Behavior

- Signal 1: PowerShell is executed with base64-encoded content.

- Signal 2: A registry key related to persistence is modified shortly after.

- Signal 3: The endpoint begins sending periodic outbound requests to an obscure domain every 60 seconds.

These are classic signs of execution, persistence and C2 behavior. When correlated, they strongly indicate hands-on-keyboard attacker activity, even if no single IoC (e.g., file hash or IP address) is triggered.

Example 3: Email Attachment with User Execution with Lateral Movement

- Signal 1: A phishing email with an Excel attachment bypasses spam filters.

- Signal 2: The recipient opens the file and enables macros, launching a script.

- Signal 3: Within minutes, the infected machine begins scanning other systems on the internal network.

This sequence reveals a classic initial compromise via phishing followed by lateral movement, a known playbook of threat actors like FIN7 or TA505. Correlation makes this an incident instead of three isolated low-priority events.

Why does it matter so much? Correlating multiple weak signals surfaces high-value detections without overwhelming analysts with alerts. It also aligns with TTP modeling and improves SOC efficiency. Tools like SIEMs are designed to perform multi-source or multi-event correlation over time windows.
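The correlation logic behind the three examples can be sketched in a few lines: group events by entity and raise an incident only when several distinct signal types occur inside one time window. The function name `correlate`, the 30-minute window, the threshold of three signals and the signal labels are assumptions for illustration, not the behavior of any particular SIEM.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def correlate(events, window=timedelta(minutes=30), min_distinct=3):
    """Report entities showing at least `min_distinct` different signal types
    inside one time window.

    `events` is an iterable of (timestamp, entity, signal_type) tuples.
    """
    by_entity = defaultdict(list)
    for ts, entity, signal in events:
        by_entity[entity].append((ts, signal))

    incidents = {}
    for entity, items in by_entity.items():
        items.sort()  # chronological order
        for i, (start, _) in enumerate(items):
            # Distinct signal types seen within `window` of this starting event.
            in_window = {sig for ts, sig in items[i:] if ts - start <= window}
            if len(in_window) >= min_distinct:
                incidents[entity] = sorted(in_window)
                break
    return incidents


events = [
    (datetime(2024, 5, 2, 3, 14), "jdoe", "anomalous_login"),
    (datetime(2024, 5, 2, 3, 19), "jdoe", "bulk_download"),
    (datetime(2024, 5, 2, 3, 25), "jdoe", "flagged_ip_contact"),
    (datetime(2024, 5, 2, 9, 0), "asmith", "anomalous_login"),
]
print(correlate(events))
```

Here the three weak signals for `jdoe` become one incident, while the single anomalous login for `asmith` stays below the threshold and generates no alert.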

IV. Creating feedback loops between detection systems and intelligence workflows

A. The importance of collaboration across the cyber stack: Why feedback between CTI and detection teams is essential for adaptive defense.

Unfortunately, in many organizations, the components of the cyber stack do not cooperate enough: CTI, detection engineering, SOC, and threat hunting teams operate in silos. This separation leads to many issues, such as missed connections, slower response times or even duplicated work. This is not a good basis for an adaptive defense, because attackers do not wait and constantly change their modus operandi. If CTI spots a new TTP but it is not promptly implemented in detection tools, the work becomes almost useless.

Detection needs context, CTI needs visibility. Detection teams often struggle with alert triage due to lack of context, while CTI teams lack visibility into real-time detection outcomes, such as false positives or emerging patterns in alerts. Each team has what the other needs. This is why collaboration across the cyber stack is mandatory. When CTI and detection teams collaborate, they create virtuous cycles: intel informs better detection, and detection results feed new intel. This adaptive loop makes the organization faster, smarter, and more resilient.

Cloud, SaaS, remote work, and ephemeral infrastructure demand fast, accurate detection. That accuracy depends on aligning telemetry with threat actor behaviors, which only happens when detection systems are tightly integrated with up-to-date intelligence.

B. From intelligence to detection: How CTI insights refine detection rules, enrich alerts, and prioritize relevant threats.

Many organizations don’t see how CTI can impact their overall detection, far more than just with mere IoCs.

First, in terms of pure detection rules, CTI sharpens what we look for. CTI provides the content that can be turned into Sigma or YARA rules, for instance. Without this information, detection teams will build generic detection rules that won’t fit the strategic needs. CTI helps focus detection efforts on what’s currently relevant, avoiding bloated rules that chase irrelevant or outdated threats. Building detection without it is like going on a hunt without the right weapons for what we will encounter. CTI also stays up to date with new attack methods, and being able to transfer this knowledge to the detection team is key.

Second, CTI adds context that makes alerts actionable. Raw alerts without intelligence are often low-fidelity, hard to triage, and time-consuming. CTI transforms them into meaningful signals. Whether detection is based on IoCs or on patterns, CTI can link alerts to ongoing campaigns or known threat actors, helping SOC analysts prioritize or escalate when needed. It can also provide additional context, such as first-seen and last-seen dates, actor timelines or geographical distribution. That information, together with the rest of CTI’s knowledge, enables severity scoring, which further helps prioritize alerts and events. CTI can also help identify false positives by flagging IoCs as outdated, easing the strain on detection teams.

Finally, CTI helps defenders focus on what matters. CTI ensures that detection teams and SOCs are not treating all alerts equally, but focusing their resources on the most relevant, likely and damaging threats. CTI knows your threat landscape, and can help you align your detection with your region, sector, technical stack or known adversaries. Connecting alerts to strategic threats is key. CTI guides the detection to look for what is crucial in your own context. By knowing your attack surface, both external and internal, it is possible for the CTI team to provide actionable intelligence, tailored for those needs, such as exploited vulnerabilities affecting your company or campaigns targeting your sector. CTI can also support proactive hunting, by generating hypotheses on what might have happened or will happen before or after an alert has been raised.

Without CTI, detection is simply blind. Detection becomes reactive and static, dealing with outdated indicators. Rules are generic and produce false positives. Alerts all have equal priority and overwhelm SOC analysts, so resources are misallocated and gaps in visibility remain hidden.

C. From detection to intelligence: How alerts, false positives, and hunting results feed back into threat modeling and intel enrichment.

Even if CTI knows a lot of things, it is far from being omniscient. It depends on real world telemetry, provided by detection systems. They are the ones operating the closest to the threat surface, they capture raw activity, anomalies and attacker footprints. Without feedback from the field, CTI remains theoretical.

For threat modeling, detection results help CTI validate whether a threat actor or technique is actually being observed in the wild targeting the victim’s infrastructure. Telemetry can also help identify previously unknown or undocumented threat actors. CTI can then focus its research on what is actually targeting your company, not what should be targeting it. It additionally helps CTI produce more actionable intelligence products, such as internal reports based on evidence, not assumptions. Detection logs and hunting can further help discover new infrastructure, malware variants or TTPs previously missed.

Threat hunting also fuels CTI discovery. Internal telemetry is a gold mine to discover suspicious artifacts, lateral movement techniques or stealthy behaviors not yet seen in public reporting. It is internal intelligence. CTI can then start investigations over this intelligence, possibly pivoting and finding new IoCs or behavior patterns to enhance its knowledge. It can help CTI with its hypotheses of what is coming next or what happened before too.

The false positive rates also help refine the CTI intelligence. Maybe the source was not trustworthy, or the collected intelligence was too broad. It improves intelligence quality by retiring stale IoCs, updating scoring and eventually distinguishing high-fidelity indicators from noisy ones. It again helps CTI understand what is legitimate in the infrastructure, despite it being a look-alike of an attacker’s technique. It also reduces the noise in both directions by retiring low-value indicators or deprioritizing irrelevant TTPs.
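One simple way to turn triage verdicts into feedback for CTI is to compute a false positive rate per intelligence source and surface the noisiest ones for review. The sketch below assumes triage exports `(source_name, is_false_positive)` pairs; the function name, the minimum sample size and the 80% cut-off are illustrative choices.

```python
from collections import Counter


def noisy_sources(verdicts, min_alerts=20, max_fp_rate=0.8):
    """Return feeds whose alerts were mostly triaged as false positives.

    `verdicts` is an iterable of (source_name, is_false_positive) pairs
    exported from alert triage (hypothetical export format).
    """
    totals, false_positives = Counter(), Counter()
    for source, is_fp in verdicts:
        totals[source] += 1
        if is_fp:
            false_positives[source] += 1
    return {
        source: false_positives[source] / total
        for source, total in totals.items()
        # Ignore sources with too few alerts to judge fairly.
        if total >= min_alerts and false_positives[source] / total > max_fp_rate
    }


verdicts = (
    [("feed_a", True)] * 18 + [("feed_a", False)] * 2   # 90% false positives
    + [("feed_b", False)] * 25                          # clean feed
    + [("feed_c", True)] * 5                            # too few alerts to judge
)
print(noisy_sources(verdicts))
```

The minimum sample size matters: without it, a feed with a handful of unlucky alerts would be retired on thin evidence.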

D. Making feedback loops operational: Practical steps to automate, integrate, and institutionalize intelligence–detection collaboration.

As we have seen, having operational feedback loops between CTI and detection is fundamental for a proper detection.

First, it is important to have communication channels across the cyber stack, whether through regular synchronisation points or a shared ticketing or collaboration board (such as Jira). For example, when detection flags a suspicious PowerShell command, a SOC analyst can create a ticket or mention it during a meeting so that CTI can directly start investigating potential linked threat actors or ongoing campaigns.

Then, it is key to have automated flows. Intelligence should be pushed directly into detection tools, using platforms such as MISP to deliver IoCs or TTPs to SIEM, EDR or NDR. Collections can be built and retrieved when needed and in the right context (YARA rules for fine-grained analysis sandboxes, Sigma rules for a SIEM, etc.). In the other direction, detection-to-intelligence feedback has to be automated too. Detection platforms should tag and export alerts, false positives and unknown patterns to CTI platforms for enrichment. Telemetry export tools such as Splunk forwarders or Sentinel playbooks should also send suspicious event metadata to CTI queues. Hunting findings must be sent to the CTI tool as well, with artifacts submitted for actor correlation and investigation.

APIs should be used to connect TIPs with detection tools and hunting platforms. Standards and formats like STIX, TAXII, MITRE ATT\&CK, Sigma, Suricata rules or YARA should be used for interoperability.

To measure the effectiveness of such a feedback loop, KPIs should be tracked, such as the number of detection rules updated via CTI input, the time from CTI publication to detection rule deployment or the number of alerts that resulted in CTI pivoting or updates. However, such KPIs should not bring competitiveness among the teams. Defense is a team sport and a virtuous circle. Cooperation is key here, and should be a priority. For example, after a breach simulation, CTI and detection teams can co-author a report showing where intel inputs worked or failed across the kill chain.
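As a small illustration, the time from CTI publication to detection rule deployment can be computed from a list of timestamp pairs. The record format and the function name below are assumptions, and the median is used rather than the mean so that one slow deployment does not dominate the figure.

```python
from datetime import datetime
from statistics import median


def median_hours_to_deploy(records):
    """Median delay, in hours, between CTI publication and rule deployment.

    `records` is an iterable of (published_at, deployed_at) datetime pairs
    (hypothetical export from the ticketing or rule-management tool).
    """
    delays = [
        (deployed - published).total_seconds() / 3600
        for published, deployed in records
    ]
    return median(delays)


records = [
    (datetime(2024, 3, 1, 9, 0), datetime(2024, 3, 2, 9, 0)),     # 24 hours
    (datetime(2024, 3, 5, 9, 0), datetime(2024, 3, 7, 9, 0)),     # 48 hours
    (datetime(2024, 3, 10, 9, 0), datetime(2024, 3, 10, 15, 0)),  # 6 hours
]
print(median_hours_to_deploy(records))
```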

V. How AI and enrichment pipelines reshape threat detection today

A. Diverse AI models power modern detection systems: From anomaly detection and behavior modeling to graph and sequence-based learning.

Diverse AI models have been developed to enhance and completely reshape today’s threat detection. Multiple research papers have emerged, giving insightful methods on how to detect anomalies in a network. Going over them all would be impossible, which is why I have selected three models for this paper.

1. Anomaly detection with Autoencoders

Variational Autoencoders (VAEs) are a class of generative models designed to learn the underlying probability distribution of input data by compressing it into a latent space and then reconstructing it with minimal error. In the context of cybersecurity, VAEs are especially effective for anomaly detection because they can be trained exclusively on normal behavior, so that a poor reconstruction signals a potential threat. According to Nguyen et al. (2019) in their GEE: Gradient-based Explainable VAE framework, "VAE models demonstrate the capacity to capture complex, non-linear patterns in network telemetry such as NetFlow," and are particularly adept at detecting spam, botnets, low-rate DoS, and stealthy scanning activity. These models use the reconstruction loss (typically mean squared error or binary cross-entropy) as a proxy for anomaly scoring: when new data deviates significantly from the training norm, the error spikes, flagging potential threats. GEE also added gradient-based explanation features to help visualize which input dimensions caused the anomaly score, allowing analysts to validate results with transparency.

Another implementation by Yukta et al. applied a CNN-augmented VAE on the NSL-KDD dataset, achieving 81.1\% accuracy and 82.7\% average precision, outperforming traditional methods like k-NN. Here, the convolutional layers helped capture sequential and spatial dependencies in packet metadata, further improving fidelity. Meanwhile, research from Nokia Bell Labs (Monshizadeh et al., 2021) explored Conditional VAEs (CVAE) that embed class labels into the latent space during training. This architecture, when combined with a downstream Random Forest classifier, enhanced data generalization and detection of subtle attacks, helping avoid the overfitting common in classic anomaly detection systems.

Concrete examples of threats detected via VAEs include zero-day malware command-and-control traffic (via unseen domain generation algorithms), abnormal privilege escalation patterns in logs, or sudden lateral movement sequences in internal systems.

However, VAEs are not without limitations. As highlighted in GEE, they may struggle when the training data is not sufficiently clean, or when anomalies closely resemble legitimate rare events, resulting in false negatives. Moreover, as VAEs optimize for reconstruction fidelity, not explicit classification, their detection thresholds must be carefully tuned, often requiring human-in-the-loop validation. Lastly, VAEs are data-hungry: poorly sampled baselines can lead to unstable latent representations. Nonetheless, when trained and integrated properly, VAEs form a powerful backbone for modern detection pipelines.

2. Temporal Convolutional Network (TCN) with Bidirectional GRU

Behavior modeling with sequence-based learning, particularly using TCN-BiGRU architectures, operates by transforming raw sequential data, such as opcode streams, system calls, or command logs, into rich representations that capture the underlying execution patterns of software or user behavior over time.

The process starts with preprocessing: raw inputs like opcode sequences are first tokenized and encoded into numerical formats, often as fixed-length vectors or embeddings, which preserve order and semantic meaning. These sequences are then fed into the Temporal Convolutional Network (TCN) layers, which apply causal convolutions with dilation. Unlike traditional convolutions, TCNs use dilated filters that skip certain time steps, enabling the model to “see” far back into the sequence efficiently without losing resolution. This structure helps TCNs capture long-range dependencies and temporal relationships critical to modeling complex behaviors spanning many operations.
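To make the dilation idea concrete, the following is a minimal pure-Python sketch of a single causal dilated convolution and of the receptive field obtained by stacking such layers. It assumes one convolution per layer and zero left-padding; real TCN blocks usually add residual connections and learned filters, and the function names here are illustrative only.

```python
def causal_dilated_conv(seq, weights, dilation=1):
    """Compute y[t] = sum_k weights[k] * seq[t - k * dilation].

    Positions before the start of the sequence are treated as zero, so the
    output at time t never depends on inputs later than t (causality).
    """
    out = []
    for t in range(len(seq)):
        acc = 0.0
        for k, w in enumerate(weights):
            idx = t - k * dilation
            if idx >= 0:
                acc += w * seq[idx]
        out.append(acc)
    return out


def receptive_field(kernel_size, dilations):
    """Time steps visible to one output after stacking one conv per dilation."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


# Usage: with kernel size 2 and dilations doubling per layer, four layers
# already cover 16 time steps of an encoded opcode sequence.
encoded_opcodes = [1, 2, 3, 4]
features = causal_dilated_conv(encoded_opcodes, weights=[1, 1], dilation=2)
span = receptive_field(kernel_size=2, dilations=[1, 2, 4, 8])
```

Because the dilations grow geometrically, the receptive field grows exponentially with depth while the number of parameters grows only linearly, which is why the network can look far back without losing resolution.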

The TCN outputs a set of feature maps summarizing key temporal signals across the input sequence, which are then passed to the Bidirectional Gated Recurrent Unit (BiGRU) layers. BiGRUs process the sequence in two directions, forward and backward, allowing the model to incorporate both past and future context at every step. This bidirectionality is particularly useful for behavioral modeling because it helps the system understand patterns that depend on the sequence’s overall shape rather than just preceding events, such as multi-step malware routines or command sequences that depend on both prior and subsequent instructions.
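The bidirectional pass can be illustrated with a deliberately tiny GRU whose input and hidden state are single numbers. This is a sketch under simplifying assumptions: the parameter names (`wz`, `uz`, and so on) are hypothetical, biases are omitted, and the update uses one common gate convention; production models would use a framework layer with vector-valued states.

```python
import math


def _sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def gru_step(x, h, p):
    """One GRU update for a scalar input x and scalar hidden state h."""
    z = _sigmoid(p["wz"] * x + p["uz"] * h)            # update gate
    r = _sigmoid(p["wr"] * x + p["ur"] * h)            # reset gate
    cand = math.tanh(p["wh"] * x + p["uh"] * (r * h))  # candidate state
    return (1.0 - z) * h + z * cand


def run_gru(seq, p):
    """Run the cell left to right and return the hidden state at each step."""
    h, states = 0.0, []
    for x in seq:
        h = gru_step(x, h, p)
        states.append(h)
    return states


def bigru(seq, p_fwd, p_bwd):
    """Pair each step's forward state (past context) with its backward state
    (future context), as a bidirectional layer does by concatenation."""
    fwd = run_gru(seq, p_fwd)
    bwd = run_gru(seq[::-1], p_bwd)[::-1]
    return list(zip(fwd, bwd))


# Usage with arbitrary illustrative weights.
params = {"wz": 0.5, "uz": 0.5, "wr": 0.5, "ur": 0.5, "wh": 1.0, "uh": 1.0}
states = bigru([1.0, 0.0, -1.0], params, params)
```

In this sketch, changing the last element of the sequence alters the backward component at the first step but leaves the forward component there untouched, which is exactly the "future context" the paragraph above refers to.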

Training these models typically involves supervised learning with large, labeled datasets that contain sequences tagged as malicious or benign, or even annotated by specific malware families or attack types. The model learns to optimize a loss function (e.g., cross-entropy for classification) that measures the difference between predicted and actual labels. Due to the sequence length and model complexity, training often requires GPUs and careful tuning of hyperparameters like convolutional filter size, dilation rates, number of BiGRU units, and learning rates.
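As a small worked example of the loss mentioned above, the sketch below computes softmax cross-entropy for a benign-versus-malicious classifier. The logits are made-up numbers and the function names are illustrative; a real training loop would rely on a framework's built-in loss and automatic differentiation.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, label):
    """Negative log-probability assigned to the true class index."""
    return -math.log(softmax(logits)[label])


def mean_loss(batch):
    """Average cross-entropy over (logits, label) pairs."""
    return sum(cross_entropy(logits, label) for logits, label in batch) / len(batch)


# Usage: class 0 = benign, class 1 = malicious (labels are an assumption).
batch = [([2.0, -1.0], 0), ([0.5, 1.5], 1), ([1.0, 1.0], 1)]
loss = mean_loss(batch)
```

The loss is small when the model puts high probability on the correct label and grows without bound as that probability approaches zero, which is what drives the parameter updates during training.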

Once trained, the model can be deployed in detection pipelines where real-time or batch sequences from network telemetry, process logs, or command history are continuously analyzed. For instance, when new opcode sequences are observed, the model evaluates whether they resemble known malicious patterns or represent anomalous behavior deviating from baseline profiles. This capability enables early detection of malware payload execution, script-based attacks, or even stealthy lateral movements that unfold over time.

Despite these strengths, the approach depends heavily on the quality and quantity of labeled training data and suffers from reduced interpretability, as deep models like TCN-BiGRU operate as “black boxes,” making it harder for analysts to understand why a sequence was flagged. Additionally, attacker techniques evolve rapidly, so models require frequent retraining with fresh data to maintain effectiveness. Computational demands also pose challenges for real-time deployment in constrained environments.

In summary, TCN-BiGRU models excel at extracting nuanced temporal features from sequential cyber telemetry, combining convolutional filters for broad temporal context with recurrent layers for detailed sequence analysis, enabling highly accurate behavior-based threat detection in complex operational settings.

3. Graph-Based Learning with GNN

Graph-based learning using Graph Neural Networks (GNNs) represents a cutting-edge approach to threat detection by leveraging the relational structure of cybersecurity data, such as hosts, processes, network flows, and user activities, modeled as interconnected nodes and edges within a graph. Unlike traditional sequence or tabular models, GNNs explicitly exploit the topology and heterogeneous relationships present in these graphs, enabling a deep understanding of how entities interact over time and across the network. In practice, each node in the graph might represent a host, a running process, a network connection, or a user account, while edges capture relationships like process spawning, network communication, or file access. The GNN then iteratively aggregates and transforms feature information from each node’s neighborhood, meaning its connected nodes and edges, learning embeddings that capture not only the attributes of each entity but also their structural context.
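The neighborhood aggregation step can be sketched without any learned weights. The example below is a simplification: it applies plain mean aggregation with self-loops over an undirected toy graph, whereas an actual GNN layer would also apply a learned transformation and a non-linearity. The node names and feature values are hypothetical.

```python
def message_passing_round(features, edges):
    """One round of mean aggregation over each node and its neighbors."""
    neighbors = {node: [node] for node in features}  # self-loop keeps own features
    for u, v in edges:
        neighbors[u].append(v)
        neighbors[v].append(u)
    updated = {}
    for node, nbrs in neighbors.items():
        dim = len(features[node])
        updated[node] = [
            sum(features[n][i] for n in nbrs) / len(nbrs) for i in range(dim)
        ]
    return updated


# Toy graph: host_a spawned proc_1, and proc_1 connected to host_b.
features = {
    "host_a": [1.0, 0.0],
    "proc_1": [0.0, 1.0],
    "host_b": [0.0, 0.0],
}
edges = [("host_a", "proc_1"), ("proc_1", "host_b")]

round_1 = message_passing_round(features, edges)
round_2 = message_passing_round(round_1, edges)
```

After one round, `host_b` only reflects its direct neighbor `proc_1`; after two rounds, information originating at `host_a` has reached it. Stacking rounds is how the embedding of one entity comes to encode multi-hop structure such as lateral movement paths.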

For instance, models like Anomal-E leverage self-supervised GNN architectures to detect intrusions without requiring labeled data. By constructing graphs from flow-based telemetry and learning to predict missing or altered graph components, Anomal-E identifies anomalous structures indicative of attacks. This approach dramatically reduces dependency on labeled datasets, a common bottleneck in cyber detection, while maintaining robust detection of previously unseen threats. Another notable example is the use of heterogeneous system provenance graphs, where different types of nodes (processes, files, network sockets) and edges (file reads, process spawns, network sends) are integrated into a single graph representation. Studies have demonstrated that graph-based learning models operating on such provenance data can detect complex multi-stage attacks, such as lateral movement and command-and-control (C2) chaining, with significantly higher accuracy and lower false positive rates compared to traditional rule-based or signature methods.

The power of GNNs in cybersecurity lies in their ability to recognize malicious clusters and anomalous graph patterns that reflect coordinated attacker behavior. For example, lateral movement within a network manifests as a particular pattern of process creations and network connections hopping across hosts, which GNNs can learn to detect even when individual events appear benign in isolation. Similarly, C2 communication chains, often disguised in legitimate traffic, create structural signatures in the graph that stand out once encoded by the GNN’s learned embeddings.

Despite their promise, deploying GNNs for threat detection involves challenges. Graph construction from raw telemetry can be computationally expensive and requires sophisticated feature engineering to encode node and edge attributes meaningfully. The models can be resource-intensive to train and infer, especially on large enterprise-scale graphs with millions of nodes and edges. Additionally, the complexity of GNNs poses interpretability issues, making it difficult for security analysts to trace why certain entities were flagged. Finally, since attackers constantly evolve tactics, continuous updates to the graph data and model retraining are necessary to maintain detection effectiveness.

In summary, GNN-based learning offers a powerful framework to model the rich, interconnected cyber environment, uncovering stealthy, multi-step attacks through structural anomaly detection and relational reasoning that outperforms many classical methods. By encoding hosts, processes, and flows as graph components, GNNs provide a holistic view of attacker behavior, making them a critical technology in next-generation threat detection systems.

B. Contextual enrichment enhances detection depth: NLP, scoring engines, and auto-tagging add structure and relevance to raw signals.

Contextual enrichment significantly deepens the effectiveness of threat detection by transforming raw alerts and telemetry into structured, meaningful insights that are easier to analyze and act upon. Natural Language Processing (NLP) techniques play a crucial role by extracting relevant entities, relationships, and intent from unstructured data sources such as threat intelligence reports, incident descriptions, or security logs. For example, NLP can automatically identify IoCs, threat actor names, malware families, or targeted assets embedded within text, enabling automated tagging and categorization that enrich alerts with valuable context. Scoring engines further refine this enrichment by assigning risk or severity scores based on factors like indicator freshness, confidence levels, and historical impact, helping analysts prioritize their investigation efforts more effectively. Auto-tagging systems leverage both NLP outputs and scoring metrics to label alerts with attributes such as adversary behavior (e.g., MITRE ATT&CK tactics and techniques), affected sectors, or geographical relevance, which supports faster correlation and filtering within SOCs.
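A minimal sketch of two of these enrichment steps follows. Regular expressions stand in for a full NLP pipeline, and the scoring weights, the 30-day half-life, and the cap on past incidents are assumptions chosen purely for illustration, not values taken from any particular product.

```python
import re

# Simplified patterns; real extractors validate octet ranges, defanged
# indicators, and many more indicator types.
IOC_PATTERNS = {
    "ipv4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
    "sha256": re.compile(r"\b[a-fA-F0-9]{64}\b"),
    "attack_technique": re.compile(r"\bT\d{4}(?:\.\d{3})?\b"),
}


def extract_indicators(text):
    """Return de-duplicated, sorted matches per indicator type."""
    return {kind: sorted(set(p.findall(text))) for kind, p in IOC_PATTERNS.items()}


def risk_score(age_days, confidence, past_incidents, half_life_days=30.0):
    """Combine freshness, source confidence (0..1), and historical impact
    into a 0..100 score. The weights are hypothetical."""
    freshness = 0.5 ** (age_days / half_life_days)  # exponential decay with age
    impact = min(past_incidents, 5) / 5             # saturate at five incidents
    return round(100 * (0.5 * freshness + 0.3 * confidence + 0.2 * impact), 1)


# Usage on a made-up report sentence.
report = "Beacon to 203.0.113.7 observed; technique T1059.001 used."
tags = extract_indicators(report)
score = risk_score(age_days=30, confidence=1.0, past_incidents=5)
```

Keeping extraction and scoring as separate functions mirrors the pipeline described above: tags can feed correlation and filtering, while the score drives the order in which analysts triage.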

Together, these contextual enrichment processes convert noisy, fragmented signals into actionable intelligence, reduce alert fatigue, and empower detection systems to focus on the most relevant threats. However, challenges remain in maintaining the accuracy and timeliness of enrichment, as well as in integrating diverse data sources without overwhelming analysts with false correlations. Nonetheless, by adding semantic structure and prioritized relevance to raw detection data, contextual enrichment acts as a force multiplier, enabling security teams to respond with greater precision and speed in an increasingly complex threat landscape.

C. Operational impact: smarter, faster, and broader: Reduced alert fatigue, accelerated triage, and multilingual threat intelligence integration.

The operational impact of advanced detection technologies shows up as smarter, faster, and broader security responses that dramatically improve how organizations manage and mitigate threats. By reducing alert fatigue through more accurate and context-rich detection, security teams can focus on genuine threats rather than being overwhelmed by false positives or low-priority notifications. This precision is often achieved through machine learning models and enrichment pipelines that filter out noise and surface high-fidelity alerts, enabling analysts to prioritize effectively. Accelerated triage is another critical benefit: automated classification, scoring, and correlation of alerts speed up initial investigation phases, cutting down mean time to detect (MTTD) and mean time to respond (MTTR).
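The two metrics can be computed directly from incident timestamps. The sketch below assumes that MTTD is measured from occurrence to detection and MTTR from detection to resolution; definitions vary between organizations, and the field names and sample incidents are hypothetical.

```python
from datetime import datetime
from statistics import mean


def mean_minutes(incidents, start_key, end_key):
    """Average elapsed minutes between two timestamps across incidents."""
    return mean(
        (i[end_key] - i[start_key]).total_seconds() / 60 for i in incidents
    )


incidents = [
    {
        "occurred": datetime(2024, 1, 1, 10, 0),
        "detected": datetime(2024, 1, 1, 10, 30),
        "resolved": datetime(2024, 1, 1, 12, 30),
    },
    {
        "occurred": datetime(2024, 1, 2, 9, 0),
        "detected": datetime(2024, 1, 2, 9, 10),
        "resolved": datetime(2024, 1, 2, 9, 40),
    },
]

mttd = mean_minutes(incidents, "occurred", "detected")  # 20.0 minutes
mttr = mean_minutes(incidents, "detected", "resolved")  # 75.0 minutes
```

Tracking both figures before and after introducing enrichment gives a simple way to check whether automated triage is actually shortening the investigation phase.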

For example, integration of Natural Language Processing (NLP) tools allows SOC analysts to quickly parse threat intelligence in multiple languages, breaking down barriers posed by globalized attacker activity and enabling access to a wider pool of actionable intelligence. Multilingual threat intelligence integration ensures that emerging threats from diverse regions are detected and understood without delay, giving defenders a more comprehensive and timely picture of the threat landscape.

Together, these operational improvements empower security teams to act more decisively and efficiently across their entire attack surface, enhancing overall resilience without increasing manpower. The challenges lie in seamless integration of diverse tools and maintaining real-time processing at scale, but the gains in responsiveness and threat coverage make these investments essential in today’s fast-evolving cybersecurity environment.

D. Ongoing challenges and active research: Addressing data bias, adversarial evasion, model transparency, and pipeline complexity at scale.

Ongoing challenges and active research in advanced threat detection focus heavily on addressing critical issues such as data bias, adversarial evasion, model transparency, and managing pipeline complexity at scale. Data bias remains a pervasive problem, as training datasets often reflect historical or environment-specific patterns that may not generalize well, causing models to miss novel or underrepresented threats. Researchers are actively exploring techniques like data augmentation and domain adaptation to mitigate this issue, but it remains a major hurdle for reliable detection. Adversarial evasion is another significant challenge: attackers increasingly craft inputs designed to deceive machine learning models, whether by manipulating features in network traffic or injecting subtle noise into logs. Defenses such as adversarial training and robust feature extraction are under development but often come at a cost in detection accuracy or computation. Model transparency and explainability also present difficulties: complex architectures like deep neural networks and graph-based models can behave as “black boxes,” making it hard for analysts to understand why certain alerts are raised or to trust automated decisions fully. This has led to a growing research emphasis on explainable AI methods to provide interpretable outputs without sacrificing performance.

Finally, the operational complexity of integrating diverse AI models and enrichment pipelines at scale poses logistical and engineering challenges. Coordinating real-time data flows, ensuring interoperability across multiple platforms, and maintaining system robustness under heavy load require sophisticated infrastructure and ongoing tuning. Despite these obstacles, active research continues to push the boundaries, aiming to build detection systems that are not only more accurate and resilient but also trustworthy and scalable in real-world cybersecurity environments.

Conclusion

We have moved beyond a simplistic collection of indicators toward a dynamic, intelligence-driven approach that learns attacker intent and adapts in real time. By leveraging advanced AI models alongside rich contextual enrichment, incorporating NLP, scoring engines, and auto-tagging, security teams can reduce alert fatigue, accelerate triage, and respond to threats with unprecedented precision and speed. This evolution is more than a technological upgrade; it’s a cultural shift toward collaboration and continuous feedback between CTI and detection teams, transforming raw signals into actionable intelligence. While challenges like data bias, adversarial evasion, and model transparency persist, ongoing research and innovation are steadily overcoming these hurdles. As cybersecurity expert Bruce Schneier puts it, “Security is a process, not a product,” and today’s detection systems embody this philosophy by enabling a dance of signals, context, and clarity, helping defenders finally learn the rhythm of modern threats.
